Deep Learning of Biomimetic Sensorimotor Control for Biomechanical Human Animation

Masaki Nakada, Tao Zhou, Honglin Chen, Tomer Weiss, and Demetri Terzopoulos

ACM Transactions on Graphics (SIGGRAPH), 2018

Abstract

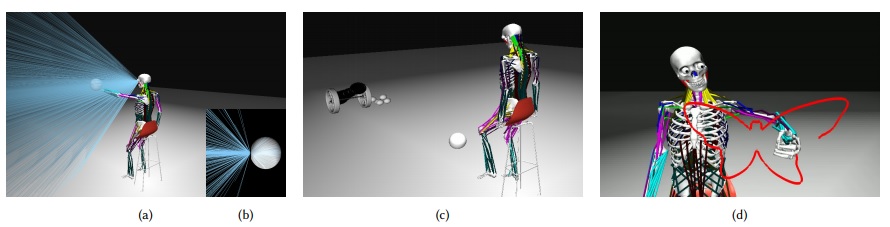

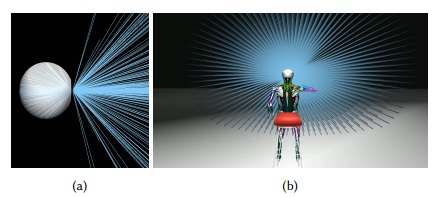

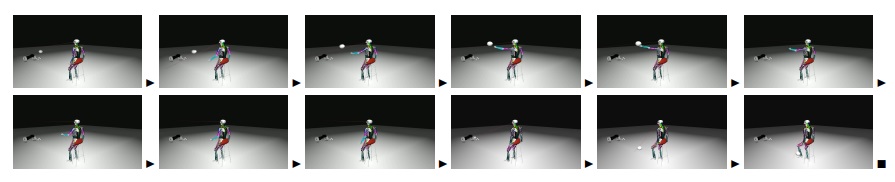

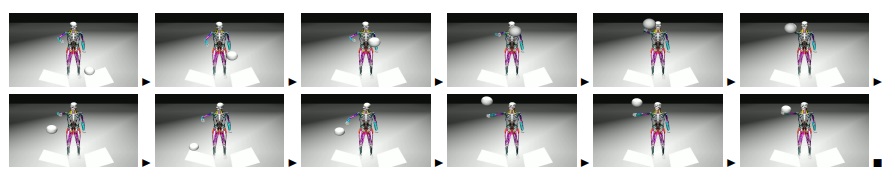

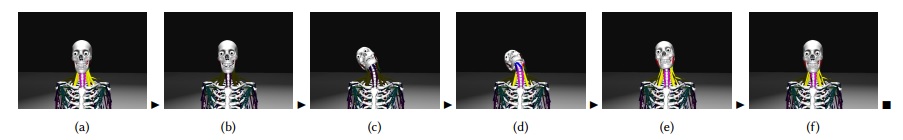

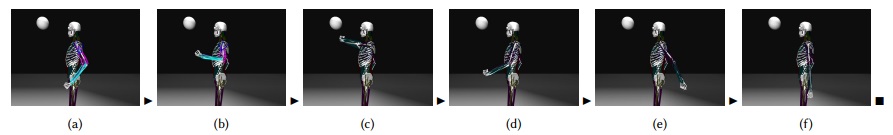

We introduce a biomimetic framework for human sensorimotor control, which features a biomechanically simulated human musculoskeletal model actuated by numerous muscles, with eyes whose retinas have nonuniformly distributed photoreceptors. The virtual human's sensorimotor control system comprises 20 trained deep neural networks (DNNs), half of which constitute the neuromuscular motor subsystem, while the other half compose the visual sensory subsystem. Directly from the photoreceptor responses, 2 vision DNNs drive eye and head movements, while 8 vision DNNs extract visual information required to direct arm and leg actions. Ten DNNs achieve neuromuscular control: 2 DNNs control the 216 neck muscles that actuate the cervicocephalic musculoskeletal complex to produce natural head movements, and 2 DNNs control each limb, i.e., the 29 muscles of each arm and the 39 muscles of each leg. By synthesizing its own training data, our virtual human automatically learns efficient, online, active visuomotor control of its eyes, head, and limbs in order to perform nontrivial tasks involving the foveation and visual pursuit of target objects coupled with visually guided limb-reaching actions to intercept the moving targets, as well as to carry out drawing and writing tasks.
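The network and muscle counts quoted in the abstract can be cross-checked with a small bookkeeping sketch. This is only an illustrative tally, not the authors' code: the dictionary keys and the `summarize` helper are hypothetical names, and the muscle total covers only the neck and limb muscles that the abstract says the motor DNNs control.

```python
# Bookkeeping sketch of the DNN allocation described in the abstract.
# All identifiers here are hypothetical labels, not names from the paper.

# Vision subsystem: 2 DNNs for eye/head movements, 8 for guiding limb actions.
VISION_DNNS = {"eye_head": 2, "limb_guidance": 8}

LIMBS = ("left_arm", "right_arm", "left_leg", "right_leg")

# Motor subsystem: 2 DNNs for the neck and 2 DNNs per limb.
MOTOR_DNNS = {"neck": 2, **{limb: 2 for limb in LIMBS}}

# Muscles controlled by the motor DNNs, as stated in the abstract.
MUSCLES = {
    "neck": 216,
    "left_arm": 29,
    "right_arm": 29,
    "left_leg": 39,
    "right_leg": 39,
}


def summarize():
    """Return the totals implied by the counts above."""
    vision = sum(VISION_DNNS.values())
    motor = sum(MOTOR_DNNS.values())
    return {
        "vision_dnns": vision,
        "motor_dnns": motor,
        "total_dnns": vision + motor,
        "controlled_muscles": sum(MUSCLES.values()),
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

The tally confirms that the per-subsystem counts add up to the 20 DNNs stated in the abstract, split evenly between vision and motor control.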

Images